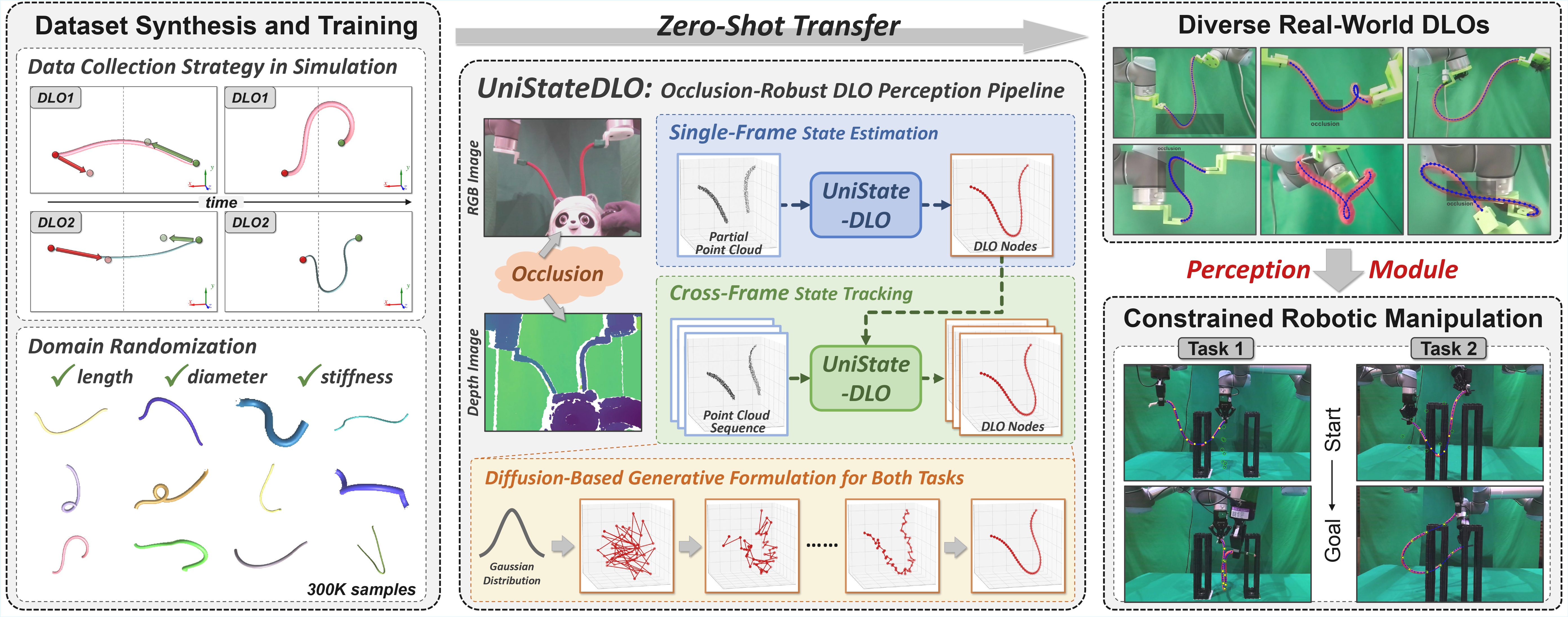

Perception of deformable linear objects (DLOs), such as cables, ropes, and wires, is fundamental to downstream robotic manipulation. Despite extensive progress, vision-based perception remains highly vulnerable to occlusions caused by obstacles and large deformations. Moreover, the high dimensionality of the state space, the lack of distinctive visual features, and sensor noise further compound the challenges of reliable DLO perception. To address these open issues, this paper presents UniStateDLO, the first complete deep-learning-based DLO perception pipeline that achieves robust performance under severe occlusion, covering both single-frame state estimation and cross-frame state tracking from partial point clouds. Both tasks are formulated as conditional generative problems, leveraging the strong capability of diffusion models to capture the complex mapping between highly partial observations and high-dimensional DLO states. Trained solely on large-scale synthetic data, UniStateDLO is highly data-efficient: it generalizes zero-shot from simulation to the real world without any real-world training data. Comprehensive simulation and real-world experiments show that UniStateDLO outperforms all state-of-the-art baselines in both estimation and tracking, producing globally smooth yet locally precise DLO state predictions in real time, even under substantial occlusion. Integration into a closed-loop DLO manipulation system further validates its ability to support stable feedback control in complex, constrained 3-D environments.
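
Because both tasks are cast as conditional generation with diffusion models, it may help to see what sampling a DLO state looks like. The sketch below is generic DDPM ancestral sampling of an N×3 array of node positions given a condition; it is a minimal illustration under stated assumptions, not UniStateDLO's implementation. The linear noise schedule, `T=50`, `num_nodes=64`, and the `denoiser(x_t, t, cond)` interface are hypothetical; in the actual pipeline the condition would come from learned features of the partial point cloud.

```python
import numpy as np

def make_schedule(T=50, beta_start=1e-4, beta_end=0.02):
    """Linear DDPM noise schedule (hypothetical values)."""
    betas = np.linspace(beta_start, beta_end, T)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars

def sample_dlo_state(denoiser, cond, num_nodes=64, T=50, seed=0):
    """DDPM ancestral sampling of a (num_nodes, 3) DLO state given a condition.

    `denoiser(x_t, t, cond)` is a hypothetical network that predicts the added noise.
    """
    rng = np.random.default_rng(seed)
    betas, alphas, alpha_bars = make_schedule(T)
    x = rng.standard_normal((num_nodes, 3))  # start from pure Gaussian noise
    for t in reversed(range(T)):
        eps_hat = denoiser(x, t, cond)
        # Posterior mean: (x_t - beta_t / sqrt(1 - abar_t) * eps_hat) / sqrt(alpha_t)
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        if t > 0:
            # Add fresh noise with variance beta_t on every step except the last
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(x.shape)
        else:
            x = mean
    return x

def oracle_denoiser(x, t, cond, T=50):
    """Toy denoiser that treats `cond` as the single true clean state (sanity check only)."""
    _, _, alpha_bars = make_schedule(T)
    return (x - np.sqrt(alpha_bars[t]) * cond) / np.sqrt(1.0 - alpha_bars[t])

target = np.linspace(0.0, 1.0, 64 * 3).reshape(64, 3)  # hypothetical "true" node positions
estimate = sample_dlo_state(oracle_denoiser, target)
print(np.abs(estimate - target).max())  # close to 0, up to floating-point error
```

With an oracle denoiser for one known state, the final step recovers that state exactly, which is a convenient check that the update rule is wired correctly before plugging in a learned, point-cloud-conditioned network.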

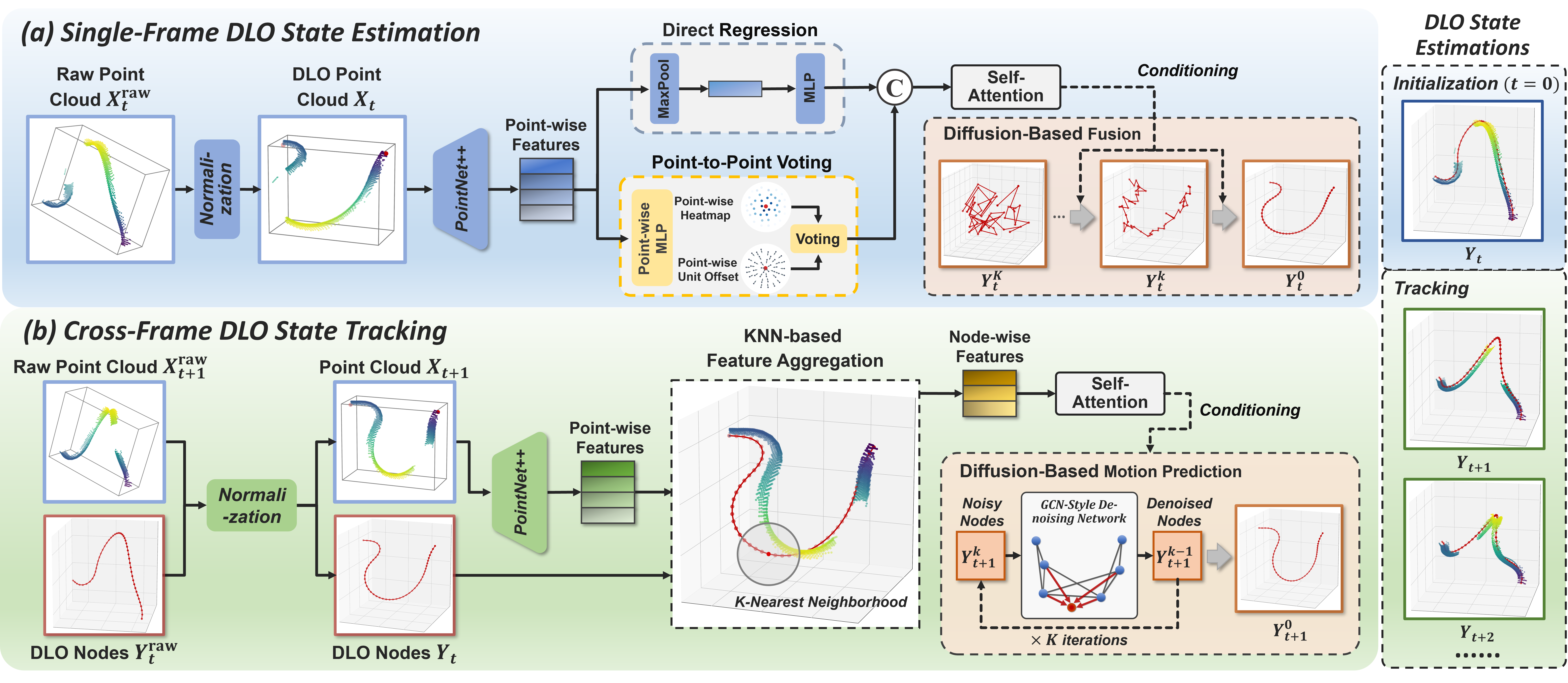

Overview of the proposed UniStateDLO pipeline, comprising Single-Frame State Estimation for initialization and Cross-Frame State Tracking for sequential motion tracking. Given a partial DLO point cloud, the state estimation module first produces coarse predictions through two complementary branches built on PointNet++ features, and then refines them with a diffusion model. For cross-frame tracking, a KNN-based feature aggregation module extracts node-wise local features around the previous frame's predictions, and a second diffusion model then infers the per-node cross-frame motion.
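
The KNN-based aggregation step can be pictured as follows: for each node predicted in the previous frame, gather the k nearest points of the current partial cloud and pool their features into one node-wise descriptor. This NumPy sketch assumes max-pooling of per-point features plus the mean offset of the neighbors; the function name `knn_aggregate`, the value of `k`, and the pooling choice are hypothetical, and the real module is learned end to end.

```python
import numpy as np

def knn_aggregate(points, feats, nodes, k=16):
    """For each node, gather its k nearest points and pool their features.

    points: (P, 3) current-frame partial point cloud
    feats:  (P, C) per-point features (e.g. from a PointNet++-style backbone)
    nodes:  (N, 3) previous-frame DLO node predictions
    returns (N, C + 3) node-wise local features
    """
    # Pairwise squared distances between nodes and points: (N, P)
    d2 = ((nodes[:, None, :] - points[None, :, :]) ** 2).sum(-1)
    k = min(k, points.shape[0])
    idx = np.argpartition(d2, k - 1, axis=1)[:, :k]  # (N, k) indices of the k nearest points
    neigh_feats = feats[idx]                          # (N, k, C)
    rel_xyz = points[idx] - nodes[:, None, :]         # (N, k, 3) offsets relative to each node
    pooled_feats = neigh_feats.max(axis=1)            # (N, C) max-pool over neighbors
    mean_offset = rel_xyz.mean(axis=1)                # (N, 3) where the local points sit
    return np.concatenate([pooled_feats, mean_offset], axis=1)
```

The mean offset tells a downstream motion head in which direction the observed material has moved relative to each previous node, while the pooled features summarize local appearance; a per-node displacement predicted from these descriptors would then be added to the previous node positions.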

A dataset of 300K samples is generated entirely in simulation by randomly deforming DLOs, using a simulator built on the Unity3D engine with the Obi Rope package. The lengths, diameters, and stiffness of the DLOs, as well as the camera viewpoints, are randomized.
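
The domain randomization described above amounts to drawing DLO and camera parameters independently for every sample. The sketch below assumes uniform sampling; all field names and the numeric ranges in `RANGES` are hypothetical placeholders, since the actual values and the way configurations are passed to Unity3D/Obi Rope are not given here.

```python
import random
from dataclasses import dataclass

@dataclass
class DLOSampleConfig:
    """One randomized simulation setup (all fields hypothetical)."""
    length_m: float
    diameter_m: float
    stiffness: float  # normalized bending stiffness on a hypothetical 0..1 scale
    cam_azimuth_deg: float
    cam_elevation_deg: float
    cam_distance_m: float

# Hypothetical uniform ranges; the actual values are not reported on this page.
RANGES = {
    "length_m": (0.5, 1.5),
    "diameter_m": (0.005, 0.02),
    "stiffness": (0.0, 1.0),
    "cam_azimuth_deg": (0.0, 360.0),
    "cam_elevation_deg": (20.0, 80.0),
    "cam_distance_m": (0.8, 1.5),
}

def sample_config(rng: random.Random) -> DLOSampleConfig:
    """Draw every parameter independently and uniformly from its range."""
    return DLOSampleConfig(**{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()})

def sample_configs(n: int, seed: int = 0) -> list[DLOSampleConfig]:
    """Reproducible list of n configurations, one per synthetic sample."""
    rng = random.Random(seed)
    return [sample_config(rng) for _ in range(n)]

configs = sample_configs(5)
```

Seeding the generator keeps a large synthetic dataset reproducible: regenerating with the same seed yields the same sequence of DLO and camera setups.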

The UniStateDLO model trained on the synthetic dataset can be applied directly to diverse real-world DLOs without collecting any real-world data or retraining. All inference runs in real time on a single NVIDIA RTX 4090 GPU: the single-frame estimation stage and the cross-frame tracking stage take on average 94.19 ms/frame and 89.35 ms/frame, respectively.
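
For reference, these latencies correspond to roughly 10.6 Hz (estimation) and 11.2 Hz (tracking). The helpers below do that conversion and show one way to measure mean per-frame latency; the function names are hypothetical. For GPU inference, the device should be synchronized before reading the clock (for example with `torch.cuda.synchronize()` in PyTorch), otherwise asynchronous kernel launches make the timings look too short.

```python
import time
from typing import Callable

def ms_to_hz(ms_per_frame: float) -> float:
    """Convert an average per-frame latency in milliseconds to frames per second."""
    return 1000.0 / ms_per_frame

def mean_latency_ms(step: Callable[[], object], n_frames: int = 100, warmup: int = 5) -> float:
    """Average wall-clock time of step() over n_frames, after a few warm-up calls."""
    for _ in range(warmup):
        step()
    start = time.perf_counter()
    for _ in range(n_frames):
        step()
    return (time.perf_counter() - start) * 1000.0 / n_frames

for stage, ms in [("single-frame estimation", 94.19), ("cross-frame tracking", 89.35)]:
    print(f"{stage}: {ms} ms/frame -> {ms_to_hz(ms):.2f} Hz")
# single-frame estimation: 94.19 ms/frame -> 10.62 Hz
# cross-frame tracking: 89.35 ms/frame -> 11.19 Hz
```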

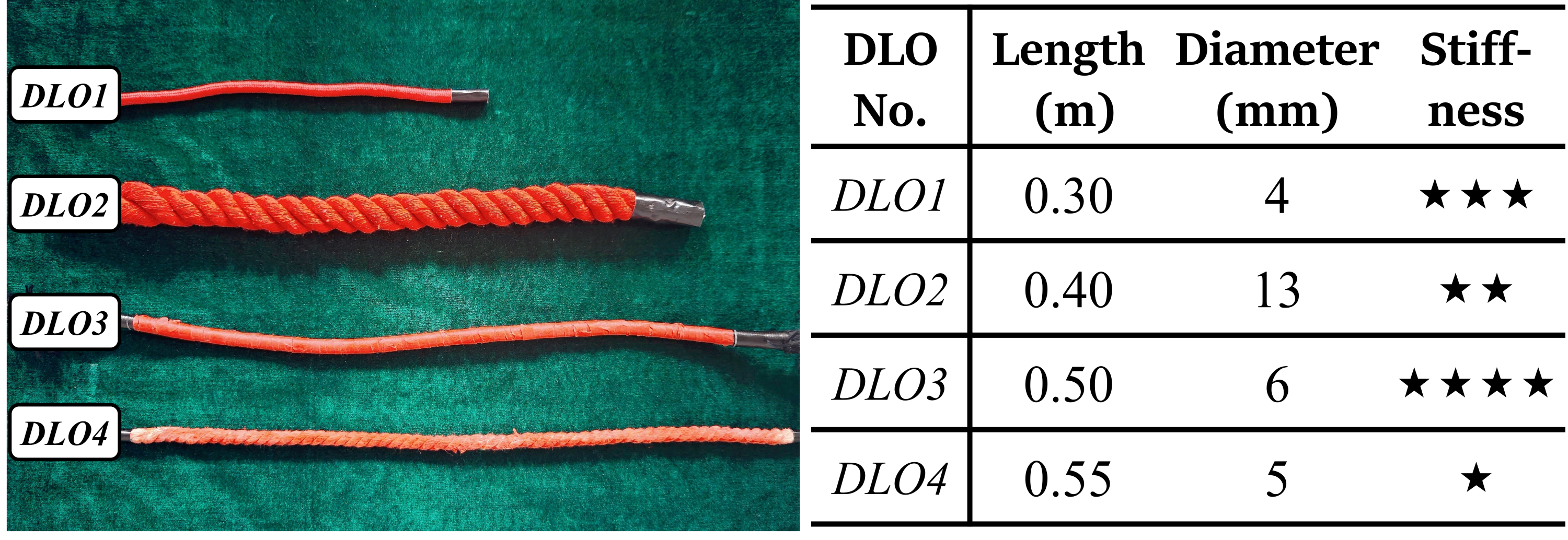

We use four DLOs with distinct materials and physical properties to evaluate the real-world generalization of the proposed UniStateDLO.

Qualitative comparison with single-frame estimation baselines.

More visualizations of real-world state estimation results from UniStateDLO.

Qualitative comparison with cross-frame tracking baselines.

Tracking performance on a long-term DLO motion sequence under dynamic, severe occlusions, and large-scale deformation.

A dual-arm robot rigidly grasps both ends of a DLO and manipulates it toward a desired 3-D configuration, which requires continuous collision avoidance and occlusion-robust perception. The proposed UniStateDLO serves as the front-end perception module, providing real-time feedback to the downstream controller.
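
The role of UniStateDLO in this system can be summarized as a perception-in-the-loop skeleton: estimate the state once to initialize, then track it every frame while the controller acts on the latest estimate. The sketch below is a generic structure under stated assumptions, not the paper's controller. Every callable (`observe`, `estimate`, `track`, `control`, `actuate`) is a hypothetical stand-in, and the toy world keeps the DLO straight between the two grippers purely so the loop can run.

```python
import numpy as np

def closed_loop(observe, estimate, track, control, actuate, steps):
    """Single-frame estimation initializes; cross-frame tracking updates each cycle."""
    state = estimate(observe())
    for _ in range(steps):
        actuate(control(state))           # controller acts on the latest DLO state
        state = track(observe(), state)   # tracking is conditioned on the previous state
    return state

# Toy demo with hypothetical stand-ins (not the paper's simulator or controller).
N = 10
world = {"nodes": np.linspace([0.0, 0.0, 0.0], [1.0, 0.0, 0.0], N)}
target_ends = np.array([[0.0, 0.5, 0.2], [0.8, 0.5, 0.2]])

def observe():
    return world["nodes"].copy()  # toy: full, noise-free observation

def estimate(cloud):
    return cloud  # stand-in for single-frame state estimation

def track(cloud, prev_state):
    return cloud  # stand-in for cross-frame tracking

def control(state, gain=0.3):
    # Proportional velocity command for the two grasped ends only
    return gain * (target_ends - state[[0, -1]])

def actuate(cmd):
    ends = world["nodes"][[0, -1]] + cmd
    world["nodes"] = np.linspace(ends[0], ends[1], N)  # toy: DLO stays straight between grippers

final_state = closed_loop(observe, estimate, track, control, actuate, steps=50)
```

Because the controller only ever sees the perception output, any estimation or tracking error propagates directly into the commands, which is why occlusion robustness of the front end matters for stable feedback control.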

Accurate state estimation and tracking despite occlusions in the initial state, enabling the DLO to be manipulated into the desired shape while avoiding obstacles.

Reliable perception throughout the intermediate stages of manipulation, with the DLO ultimately reaching complex configurations with self-intersections.

Robust under large-scale occlusion, even when one endpoint of the DLO remains completely invisible for an extended period.